MCP and the Future of AI Tool Integration

The Model Context Protocol is quietly becoming the USB-C of AI tooling — a universal standard that lets any model talk to any tool. Here's why it matters and how it's already changing my workflow.

If you've spent any time building with AI tools over the past year, you've felt the friction: every model has its own way of calling functions, every IDE plugin speaks a slightly different dialect, and integrating a new tool means writing yet another adapter. The Model Context Protocol (MCP), originally introduced by Anthropic in late 2024, is changing that — and after using it extensively over the past few months, I'm convinced it's the most important piece of AI infrastructure most developers aren't paying attention to yet.

What Is MCP, Really?

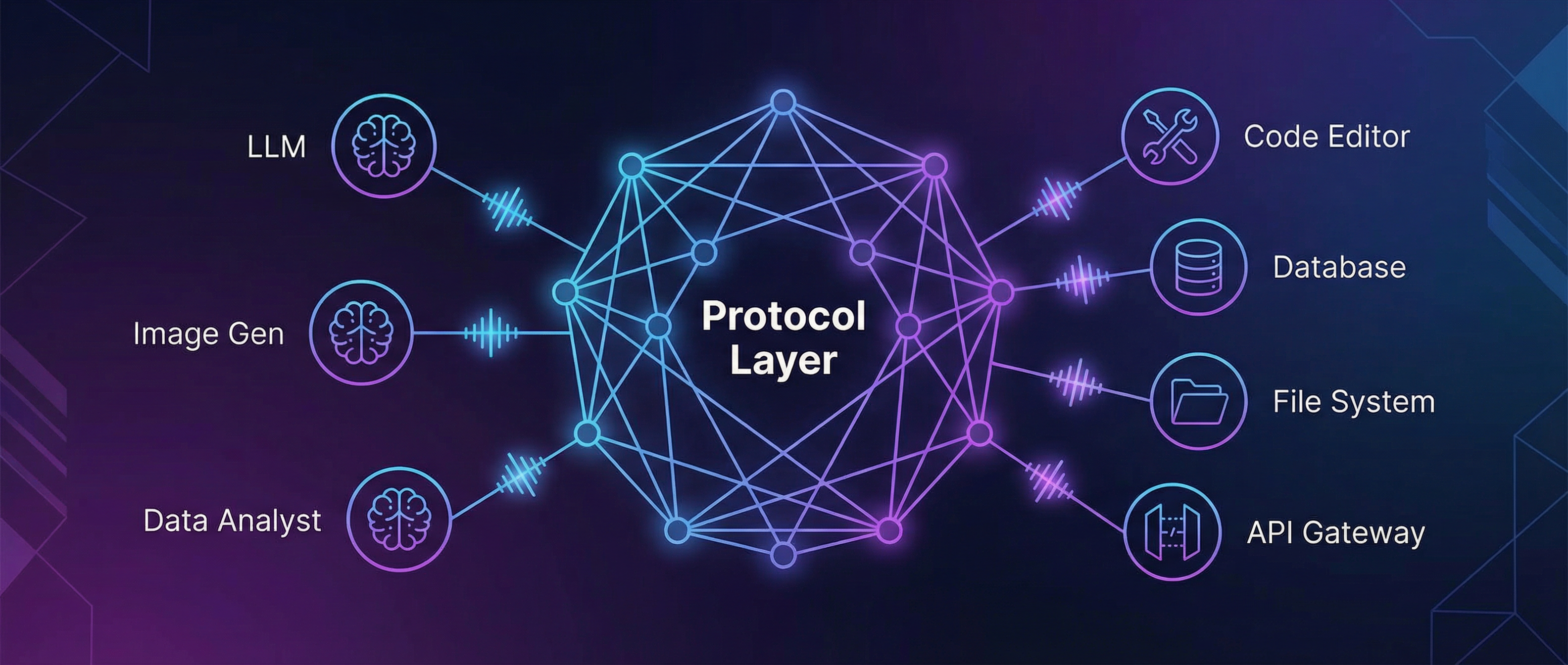

At its core, MCP is an open standard that defines how AI models interact with external tools, data sources, and services. Think of it as a universal interface layer: instead of building custom integrations for every model-tool combination, you build one MCP server for your tool, and any MCP-compatible client can use it.

The protocol defines three main primitives:

- Tools: Functions the model can invoke (query a database, create a file, call an API)

- Resources: Data the model can read (files, documentation, live data feeds)

- Prompts: Reusable templates for common interactions

A tool definition, for instance, is just a name, a description, and a JSON Schema for its inputs:

```json
{
  "name": "query_database",
  "description": "Execute a read-only SQL query",
  "inputSchema": {
    "type": "object",
    "properties": {
      "query": { "type": "string" },
      "database": { "type": "string", "enum": ["production", "analytics"] }
    }
  }
}
```
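To make the definition concrete, here is a minimal sketch of the server side in Python, using only the standard library. MCP messages are JSON-RPC 2.0: a client discovers tools with `tools/list` and invokes one with `tools/call`, passing the tool's `name` and an `arguments` object, and a successful call returns a `content` list. The `run_readonly_query` stub and the `handle_request` dispatcher are hypothetical. A real server would use one of the SDKs and an actual database driver, and would also handle the `initialize` handshake that this sketch skips.

```python
import json

# The query_database tool definition from above, as a Python dict.
QUERY_DATABASE_TOOL = {
    "name": "query_database",
    "description": "Execute a read-only SQL query",
    "inputSchema": {
        "type": "object",
        "properties": {
            "query": {"type": "string"},
            "database": {"type": "string", "enum": ["production", "analytics"]},
        },
    },
}


def run_readonly_query(database: str, query: str) -> str:
    # Hypothetical stub: a real server would execute the query over a
    # read-only database connection and serialize the rows.
    return f"[{database}] {query}"


def handle_request(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for the tools/list or tools/call method."""
    reply = {"jsonrpc": "2.0", "id": request["id"]}
    method = request["method"]
    params = request.get("params", {})

    if method == "tools/list":
        reply["result"] = {"tools": [QUERY_DATABASE_TOOL]}
        return reply

    if method == "tools/call":
        args = params.get("arguments", {})
        allowed = QUERY_DATABASE_TOOL["inputSchema"]["properties"]["database"]["enum"]
        if params.get("name") != "query_database" or args.get("database") not in allowed:
            # Unknown tool or bad arguments: a JSON-RPC "Invalid params" error.
            reply["error"] = {"code": -32602, "message": "Invalid tool name or arguments"}
            return reply
        text = run_readonly_query(args["database"], args.get("query", ""))
        reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
        return reply

    # Any other method: JSON-RPC "Method not found".
    reply["error"] = {"code": -32601, "message": f"Method not found: {method}"}
    return reply


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "query_database",
                   "arguments": {"database": "analytics", "query": "SELECT 1"}}}
print(json.dumps(handle_request(call)))
```

Note that the server never mentions a model: it only describes the tool and answers requests, which is what lets any client reuse it.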

Why This Matters Now

Before MCP, every AI-powered tool I used had its own integration story. My code editor had one way of giving context to the model. My CLI tools had another. My custom scripts were entirely bespoke. The result was a fragmented experience where switching between models or tools meant rewriting glue code.

What MCP provides is composability. I now run a handful of MCP servers (one for my project's codebase, one connected to our internal documentation, one for database access), and any MCP-compatible client can use all of them at once. When Cursor, Claude Desktop, or any other tool adopts MCP, it gets access to my entire toolchain without my writing a single new line of integration code; at most I add an entry to that client's server configuration.
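The composability point is easier to see in code. Below is a rough sketch, under my own simplifying assumptions, of what a client does with several servers: it merges every server's tool list into one set it can offer the model, and routes each call back to the server that owns the tool. `FakeServer`, `Client`, and the three tool names are hypothetical, and real clients talk to each server over its own stdio or HTTP connection rather than calling Python objects.

```python
from typing import Callable, Dict, List


class FakeServer:
    """Hypothetical stand-in for one MCP server connection."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., str]]):
        self.name = name
        self._tools = tools

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call_tool(self, tool: str, arguments: dict) -> str:
        return self._tools[tool](**arguments)


class Client:
    """Merges the tools of several servers and routes each call to its owner."""

    def __init__(self, servers: List[FakeServer]):
        self._route: Dict[str, FakeServer] = {}
        for server in servers:
            for tool in server.list_tools():
                # This sketch refuses duplicate names; real clients resolve
                # collisions in their own ways.
                if tool in self._route:
                    raise ValueError(f"{tool} is exposed by more than one server")
                self._route[tool] = server

    def available_tools(self) -> List[str]:
        # The merged list the client would present to the model.
        return sorted(self._route)

    def call(self, tool: str, arguments: dict) -> str:
        return self._route[tool].call_tool(tool, arguments)


client = Client([
    FakeServer("codebase", {"search_code": lambda pattern: f"matches for {pattern}"}),
    FakeServer("docs", {"search_docs": lambda term: f"docs about {term}"}),
    FakeServer("db", {"query_database": lambda query: f"rows for {query}"}),
])
print(client.available_tools())
print(client.call("search_docs", {"term": "deploys"}))
```

Adding a fourth server is one more entry in that list; none of the existing servers change.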

My Experience: From Skeptic to Convert

I'll be honest — when MCP was first announced, I thought it was another protocol that would die in committee. We've seen plenty of "universal standards" in tech that never gained traction. But three things changed my mind:

1. Adoption velocity. Claude Desktop supported MCP from launch, and within months Cursor, Windsurf, and a growing list of other tools adopted it. The community started building MCP servers for everything: GitHub, Postgres, Slack, Jira, file systems.

2. The developer experience is genuinely good. Writing an MCP server is straightforward: the official SDKs (Python and TypeScript among them) handle the transport and message plumbing, and you focus on defining your tools. I built a custom MCP server for our internal deployment pipeline in an afternoon.

3. It solved a real pain point in my workflow. At GitLab, I spent years thinking about developer tool integration and the overhead of maintaining plugins across multiple platforms. MCP is the answer to a problem I lived with for a long time — and it's elegant in its simplicity.
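To show how much plumbing an SDK takes off your hands, here is a stripped-down sketch of the stdio transport, where a server reads newline-delimited JSON-RPC messages on stdin and writes one response per line to stdout. It assumes a single hypothetical `deploy` tool, skips the `initialize` handshake and capability negotiation, and uses `io.StringIO` in place of the real streams; `serve` and `call_tool` are names I made up for illustration.

```python
import io
import json
from typing import Callable, Dict, TextIO


def serve(handlers: Dict[str, Callable[[dict], dict]], inp: TextIO, out: TextIO) -> None:
    """Read newline-delimited JSON-RPC messages from inp and write replies to out."""
    for line in inp:
        line = line.strip()
        if not line:
            continue
        message = json.loads(line)
        if "id" not in message:
            # Notifications (e.g. notifications/initialized) get no reply.
            continue
        handler = handlers.get(message["method"])
        if handler is None:
            reply = {"jsonrpc": "2.0", "id": message["id"],
                     "error": {"code": -32601, "message": "Method not found"}}
        else:
            reply = {"jsonrpc": "2.0", "id": message["id"],
                     "result": handler(message.get("params", {}))}
        # One JSON object per line; json.dumps emits no embedded newlines here.
        out.write(json.dumps(reply) + "\n")
        out.flush()


# Hypothetical deployment tool: the only part of the server that is "yours".
# For brevity it ignores params["name"] and assumes it is always "deploy".
def call_tool(params: dict) -> dict:
    service = params["arguments"]["service"]
    return {"content": [{"type": "text", "text": f"deploy queued for {service}"}],
            "isError": False}


requests = io.StringIO(
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}\n'
    '{"jsonrpc": "2.0", "id": 1, "method": "tools/call", '
    '"params": {"name": "deploy", "arguments": {"service": "api"}}}\n'
)
responses = io.StringIO()
serve({"tools/call": call_tool}, requests, responses)
print(responses.getvalue())
```

In a real process you would pass `sys.stdin` and `sys.stdout` instead of the `StringIO` objects. Everything except `call_tool` is the kind of code an SDK writes for you.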

The Competitive Landscape

MCP isn't the only game in town. OpenAI has its function-calling spec, Google has extensions, and various frameworks have their own tool-use abstractions. But MCP's advantage is that it's model-agnostic and open. You build one server, and it works with any MCP client, whether that client is driving Claude, GPT, Gemini, or a local model running through Ollama.
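Model-agnosticism works because the client, not the server, adapts tool definitions to whatever model it is driving. Here is a sketch of that translation step: the MCP tool's JSON Schema is reused as-is and only the wrapper changes. The two target shapes reflect OpenAI's Chat Completions `tools` format and Anthropic's Messages API `tools` format as I understand them; check the current provider docs before relying on the exact field names.

```python
import json


def to_openai_tool(mcp_tool: dict) -> dict:
    # Chat Completions "function" tool: the JSON Schema goes under "parameters".
    return {
        "type": "function",
        "function": {
            "name": mcp_tool["name"],
            "description": mcp_tool.get("description", ""),
            "parameters": mcp_tool["inputSchema"],
        },
    }


def to_anthropic_tool(mcp_tool: dict) -> dict:
    # Messages API tool: the same JSON Schema goes under "input_schema".
    return {
        "name": mcp_tool["name"],
        "description": mcp_tool.get("description", ""),
        "input_schema": mcp_tool["inputSchema"],
    }


tool = {
    "name": "query_database",
    "description": "Execute a read-only SQL query",
    "inputSchema": {"type": "object", "properties": {"query": {"type": "string"}}},
}
print(json.dumps(to_openai_tool(tool), indent=2))
print(json.dumps(to_anthropic_tool(tool), indent=2))
```

The server author never writes either function; that is the client's job, which is why one server can serve every model.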

That said, the ecosystem is still early. Discoverability of MCP servers is limited, error handling across implementations isn't consistent, and there's no centralized registry yet. These are solvable problems, but they're real today.
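MCP gives servers two ways to report failure: a JSON-RPC `error` object for protocol-level problems, and a normal `result` with `isError: true` for failures inside the tool. Because implementations don't always agree on which channel to use, a small client-side normalizer helps. This is a minimal sketch under that assumption; `normalize_tool_response` is a hypothetical helper, not part of any SDK, and it keeps only `text` content blocks.

```python
from typing import Tuple


def normalize_tool_response(response: dict) -> Tuple[bool, str]:
    """Collapse both MCP error channels into a single (ok, text) pair."""
    if "error" in response:
        # Protocol-level failure: a JSON-RPC error object with code and message.
        err = response["error"]
        return False, f"JSON-RPC error {err.get('code')}: {err.get('message', '')}"
    result = response.get("result", {})
    # Tool-level result: join the text blocks; isError is optional and defaults to false.
    text = "\n".join(
        block.get("text", "")
        for block in result.get("content", [])
        if block.get("type") == "text"
    )
    return not result.get("isError", False), text


print(normalize_tool_response(
    {"jsonrpc": "2.0", "id": 1, "error": {"code": -32602, "message": "Unknown tool"}}))
print(normalize_tool_response(
    {"jsonrpc": "2.0", "id": 2,
     "result": {"content": [{"type": "text", "text": "rate limited"}], "isError": True}}))
```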

Where This Is Going

The trajectory is clear: MCP or something very much like it will become the standard interface between AI models and the real world. The same way REST APIs standardized how services communicate, MCP is standardizing how AI systems interact with tools.

For developers, the implication is straightforward: if you're building any tool or service that you want AI systems to interact with, invest in an MCP server now. The protocol is stable enough to build on, the ecosystem is growing fast, and the payoff — your tool being instantly usable by every MCP-compatible AI client — is significant.

I've already started converting my personal automation scripts into MCP servers. It's a small investment that pays off every time a new AI tool adopts the protocol.

MCP is open source and the spec is available at modelcontextprotocol.io. If you're building developer tools, this is worth your weekend.